I recently had the occasion to look into securing access to an AWS S3 bucket. Although the subject can seem simple, there are different steps to cover in order to implement it properly. Let’s jump in!

Objective

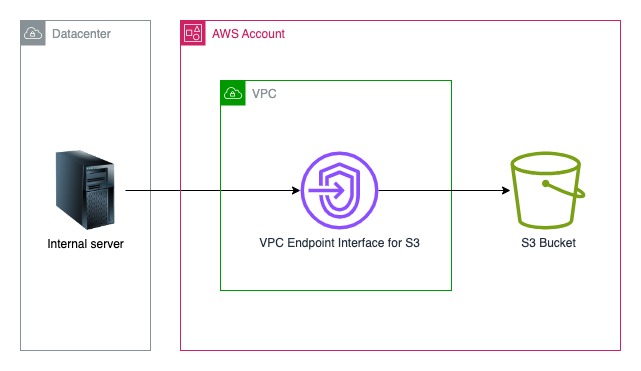

We have an AWS S3 bucket that needs to be accessed from an on-premises server. Objects in the S3 bucket are encrypted with a KMS key. Since this server has no IAM identity, we had to create an IAM user in order to call the S3 API.

Goal: We want to minimize the impact of a compromised access key. We want to restrict the specific IAM identity that needs bucket access so that it can only perform the authorized actions on the S3 bucket and nothing else. Additionally, we want it to access the S3 bucket only from the on-premises server. In other words, the AWS access key is useless if it is not used from the trusted servers.

What does AWS say about IAM users:

IAM best practices recommend that you require human users to use federation with an identity provider to access AWS using temporary credentials instead of using IAM users with long-term credentials.

In the following, we use the term IAM identity to cover both IAM users and IAM roles. All the recommendations given apply to both. The most secure way is to grant the IAM permissions to an IAM role and to allow the IAM user to assume that role. This way, the S3 bucket actions are made with temporary credentials obtained from AWS STS.
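As a minimal sketch of that setup, the role's trust policy only needs to name the IAM user as the principal allowed to call sts:AssumeRole. The snippet below builds such a document with Python's standard json module. The account ID 111122223333 and the user name onprem-s3-user are hypothetical placeholders, and the IAM user would additionally need sts:AssumeRole on the role in its own identity-based policy.

```python
import json


def build_trust_policy(user_arn: str) -> dict:
    """Return a role trust policy that lets one IAM user assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # Only this IAM user may assume the role.
                "Principal": {"AWS": user_arn},
                "Action": "sts:AssumeRole",
            }
        ],
    }


if __name__ == "__main__":
    # Hypothetical IAM user used by the on-premises server.
    policy = build_trust_policy("arn:aws:iam::111122223333:user/onprem-s3-user")
    print(json.dumps(policy, indent=2))
```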

Establishing a Data perimeter

In order to protect the S3 bucket, we will implement a technique known as a data perimeter. In short, it covers the actions taken to restrict the IAM identities that can make AWS requests (who I trust to access the bucket) and to restrict the locations from which the AWS requests can be made (network perimeter). You can find additional resources and examples here.

We will use several techniques to achieve that:

• A VPC interface endpoint is used to communicate with the S3 service.

• A Security Group (SG) is configured to protect the VPC Interface Endpoint and only allow known on-premises server IP addresses.

• The VPC Endpoint policy must be configured to only allow necessary actions, authorized identities, and specify the resources.

• The bucket policy only allows traffic that comes from the VPC endpoint (except for specific cases, as we will see).

• Depending on the type of application, logging S3 requests may be necessary. Currently, when S3 logs are enabled, they must be sent to an S3 bucket that uses default SSE-S3 encryption with AES-256.

Using an S3 Bucket: observations

Usage within an AWS account: unless an explicit deny applies, an explicit allow in an IAM identity-based policy OR in a bucket policy is sufficient to grant access to the bucket (even if no permission is present on the “identity side”).

➔ Consistency is needed regarding the “side” where the permissions are placed. Placing them in identity-based policies is recommended, which leaves the resource-based policy free to implement the data perimeter protection.
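To make the “OR” explicit, here is a deliberately simplified model of same-account evaluation in Python. It is an assumption-laden sketch that ignores SCPs, permission boundaries, session policies and KMS key policies; it only captures the rule stated above.

```python
def same_account_decision(identity_allows: bool, resource_allows: bool,
                          explicit_deny: bool) -> str:
    """Simplified same-account evaluation (no SCPs, boundaries or session policies)."""
    # An explicit deny in any policy always wins.
    if explicit_deny:
        return "Deny"
    # Within one account, an allow on either side is sufficient.
    if identity_allows or resource_allows:
        return "Allow"
    # No statement matched: implicit deny.
    return "ImplicitDeny"


# The bucket policy allows, the identity policy is silent: access is granted.
print(same_account_decision(identity_allows=False, resource_allows=True,
                            explicit_deny=False))  # prints Allow
```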

Bucket policy: AWS account as principal:

```json
"Principal": { "AWS": "arn:aws:iam::<AWS account>:root" }
```

Putting an AWS account as the principal in a bucket policy (or in a resource-based policy in general) is NOT an explicit allow on its own: it delegates access to the resource to the IAM permissions of the identity-based policies in that AWS account.

- Any IAM identity of the account will be able to perform the associated action if it is present in the identity-based policy (and covers the concerned resource).

- The Principal is not necessary for intra-account access on a resource-based policy if we handle IAM permissions on the identity-based policies side.

NB: These elements are also applicable to other resource-based policies (not just bucket policy).
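The delegation rule can be sketched the same way. In this simplified Python model (same account, a matching Allow in the bucket policy, no deny anywhere, IAM user principals only; the account ID and user name are hypothetical), a bucket policy naming the account, either as a bare 12-digit ID or as the `:root` ARN, only delegates to the identity-based policies, while naming a specific IAM user grants access by itself.

```python
import re

# A principal naming the whole account: bare 12-digit ID or the ":root" ARN.
ACCOUNT_PRINCIPAL = re.compile(r"(?:\d{12}|arn:aws:iam::\d{12}:root)")


def grants_by_itself(principal: str) -> bool:
    """True if naming this principal in a same-account bucket policy is enough on its own."""
    # Naming the account only delegates the decision to identity-based policies.
    return ACCOUNT_PRINCIPAL.fullmatch(principal) is None


def is_allowed(bucket_policy_principal: str, identity_policy_allows: bool) -> bool:
    """Simplified: same account, matching Allow in the bucket policy, no deny."""
    if grants_by_itself(bucket_policy_principal):
        return True
    # Account principal: the identity-based policy must also allow the action.
    return identity_policy_allows


print(is_allowed("arn:aws:iam::111122223333:root", identity_policy_allows=False))  # prints False
```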

KMS Encryption: observations

- Permissions on IAM Identity side: having s3:* permissions as an IAM identity is not sufficient to read/write in a bucket encrypted with a KMS key. You also need permissions to encrypt/decrypt objects.

- Envelope Encryption: only the kms:GenerateDataKey and kms:Decrypt permissions are necessary to read/write in an S3 bucket.

- Enabling Default Encryption with KMS on a Bucket: new objects are encrypted with the KMS key, with no impact on existing objects. The same principle applies to key rotation: rotating a KMS key does not re-encrypt existing objects, so the compromise of a KMS key is not resolved by key rotation.

- KMS Key Encryption: KMS key encryption is not enforced by default when adding an object. A client can specify another encryption method:

```shell
aws s3api put-object --bucket example-s3-bucket1 --key object-key-name --server-side-encryption AES256
```

Example of a condition to enforce encryption in a bucket policy (used in a Deny statement on s3:PutObject):

```json
"Condition": {
  "StringNotEquals": {
    "s3:x-amz-server-side-encryption-aws-kms-key-id": "<KMS_KEY_ARN>"
  }
}
```
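The KMS requirements above can be summarized as a small lookup table. This Python sketch is an illustration, not an exhaustive list of S3 actions: it maps the three actions used in this article to the KMS permissions S3 needs when objects are encrypted with SSE-KMS, and reports which ones an identity is missing.

```python
from typing import Dict, Iterable, List, Set

# KMS permissions S3 needs for objects encrypted with SSE-KMS (envelope encryption).
KMS_ACTIONS_FOR_S3: Dict[str, Set[str]] = {
    # S3 asks KMS for a new data key to encrypt the object.
    # (Multipart uploads additionally need kms:Decrypt.)
    "s3:PutObject": {"kms:GenerateDataKey"},
    # S3 asks KMS to decrypt the data key stored with the object.
    "s3:GetObject": {"kms:Decrypt"},
    # Listing object keys does not touch encrypted data.
    "s3:ListBucket": set(),
}


def required_kms_actions(s3_actions: Iterable[str]) -> List[str]:
    required: Set[str] = set()
    for action in s3_actions:
        required |= KMS_ACTIONS_FOR_S3.get(action, set())
    return sorted(required)


def missing_kms_actions(s3_actions: Iterable[str], granted: Iterable[str]) -> List[str]:
    return sorted(set(required_kms_actions(s3_actions)) - set(granted))


print(required_kms_actions(["s3:PutObject", "s3:GetObject", "s3:ListBucket"]))
# prints ['kms:Decrypt', 'kms:GenerateDataKey']
```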

Data perimeter: observations

Resource-based Policy with Deny: Beware of self-lockout! Check the policy thoroughly before an update: in case of a lockout, you need to use the root user of the account, which is not affected by the “deny”, to “fix” the policy.

Multiple Conditions in a Deny Statement: if at least one condition is not met, the deny does not apply. In other words, all conditions must be true for the deny to apply.
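A minimal Python sketch of this AND semantics, assuming the request context is represented as a plain dictionary of condition keys (the VPC ID vpc-0example is a placeholder). The two conditions mirror the NetworkPerimeter statement of the bucket policy, without its principal exceptions.

```python
from typing import Callable, Dict, Iterable

RequestContext = Dict[str, str]
Condition = Callable[[RequestContext], bool]


def deny_applies(conditions: Iterable[Condition], ctx: RequestContext) -> bool:
    """A Deny statement applies only if ALL of its conditions evaluate to true."""
    return all(condition(ctx) for condition in conditions)


network_perimeter = [
    # StringNotEquals on aws:SourceVpc (a missing key also counts as "not equal").
    lambda ctx: ctx.get("aws:SourceVpc") != "vpc-0example",
    # Bool aws:PrincipalIsAWSService = false.
    lambda ctx: ctx.get("aws:PrincipalIsAWSService") == "false",
]

from_vpc = {"aws:SourceVpc": "vpc-0example", "aws:PrincipalIsAWSService": "false"}
from_internet = {"aws:PrincipalIsAWSService": "false"}
print(deny_applies(network_perimeter, from_vpc))       # prints False
print(deny_applies(network_perimeter, from_internet))  # prints True
```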

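Condition aws:SourceIp: this only works for public IP addresses (requests that reach S3 through a VPC endpoint do not carry the caller's private IP address in aws:SourceIp). Solution: apply the CIDR restrictions via a security group rule.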

IfExists in a Condition Operator:

```json
"Condition": {
  "StringNotEqualsIfExists": {
    "aws:PrincipalOrgID": "<my-org-id>",
    "aws:PrincipalAccount": [
      "<account-id>"
    ]
  }
}
```

Questioning the Usefulness of “IfExists” in a Deny-based Policy:

If you are using an “Effect”: “Deny” element with a negated condition operator like StringNotEqualsIfExists, the request is still denied even if the condition key is missing from the request: a negated operator such as StringNotEquals already evaluates to true when the key is missing, so IfExists changes nothing.

Because of this, we advise not to use IfExists in this context, in order to avoid confusion. Remember: a restriction on aws:PrincipalOrgID will always be better than no restriction at all!
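The following Python sketch models the two operators for a single-valued key, following the AWS rule that a negated operator evaluates to true when the key is absent from the request context, and that IfExists also yields true for an absent key. The organization ID is hypothetical. In a Deny statement, both operators give the same result in every case.

```python
from typing import Optional, Sequence


def string_not_equals(value: Optional[str], values: Sequence[str]) -> bool:
    # Negated operator: an absent key (None) matches none of the values -> True.
    if value is None:
        return True
    return value not in values


def string_not_equals_if_exists(value: Optional[str], values: Sequence[str]) -> bool:
    # IfExists: an absent key makes the condition True; otherwise evaluate normally.
    if value is None:
        return True
    return string_not_equals(value, values)


ORG_IDS = ["o-myorg"]  # hypothetical organization ID
for principal_org in (None, "o-otherorg", "o-myorg"):
    # In a Deny statement, True means the request is denied.
    print(principal_org,
          string_not_equals(principal_org, ORG_IDS),
          string_not_equals_if_exists(principal_org, ORG_IDS))
```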

IAM policy

Here is the IAM policy that we will use for our IAM identity that needs access to the S3 Bucket (IAM role or user):

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": [
        "s3:PutObject",
        "s3:ListBucket",
        "s3:GetObject"
      ],
      "Effect": "Allow",
      "Resource": [
        "arn:aws:s3:::<BUCKET_NAME>/*",
        "arn:aws:s3:::<BUCKET_NAME>"
      ]
    },
    {
      "Action": [
        "kms:GenerateDataKey",
        "kms:Decrypt"
      ],
      "Effect": "Allow",
      "Resource": "arn:aws:kms:<REGION>:<ACCOUNT>:key/<KEY_ID>"
    }
  ]
}
```

Limiting Actions: note that IAM permissions do not necessarily correspond to the actions that the identity can actually perform: within the same account, an explicit allow in a resource-based policy is sufficient.

Solution: use a permission boundary to restrict the actions that can be performed.

Permission boundary

Order of Evaluation of IAM Policies: if we look at the AWS docs, we can see that the permission boundary is evaluated after the resource-based and identity-based policies (except when there is an explicit deny, which always wins).

➔ If there is an explicit allow on the target resource side, then the permission boundary has no effect (unlike a Service Control Policy, which is checked before resource-based policies).

➔ It is therefore necessary to use deny statements to restrict access: we specify the necessary permissions, then add deny statements so that only the specified actions are allowed.
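A simplified Python model of this reasoning, assuming an IAM user principal in the same account and ignoring SCPs and session policies: an explicit deny (including one written in the boundary) always wins, a bucket policy allow that names the IAM user ARN bypasses the boundary's allow list, and otherwise both the identity-based policy and the boundary must allow the request.

```python
def boundary_model(explicit_deny: bool, resource_policy_allows: bool,
                   identity_policy_allows: bool, boundary_allows: bool) -> bool:
    """Simplified evaluation for an IAM user in the same account."""
    # 1. An explicit deny anywhere, including a Deny in the boundary, always wins.
    if explicit_deny:
        return False
    # 2. A bucket policy allow naming the IAM user ARN is enough:
    #    the boundary's allow list is not consulted.
    if resource_policy_allows:
        return True
    # 3. Otherwise both the identity-based policy and the boundary must allow.
    return identity_policy_allows and boundary_allows


# Bucket policy allows the user, boundary does not list the action: still allowed.
print(boundary_model(False, True, False, False))  # prints True
```

Case 2 is the reason the permission boundary that follows relies on Deny statements: only a deny can stop a request that a resource-based policy already allows.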

Here is the IAM policy that we will use for the permission boundary:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": [
        "s3:ListBucket",
        "s3:GetObject",
        "s3:PutObject"
      ],
      "Effect": "Allow",
      "Resource": [
        "arn:aws:s3:::<BUCKET>",
        "arn:aws:s3:::<BUCKET>/*"
      ]
    },
    {
      "Action": [
        "kms:Decrypt",
        "kms:GenerateDataKey"
      ],
      "Effect": "Allow",
      "Resource": [
        "arn:aws:kms:<REGION>:<ACCOUNT>:key/<KEY_ID>"
      ]
    },
    {
      "Effect": "Deny",
      "Action": "*",
      "NotResource": [
        "arn:aws:s3:::<BUCKET>",
        "arn:aws:s3:::<BUCKET>/*",
        "arn:aws:kms:<REGION>:<ACCOUNT>:key/<KEY_ID>"
      ]
    },
    {
      "Effect": "Deny",
      "NotAction": [
        "s3:ListBucket",
        "s3:GetObject",
        "s3:PutObject",
        "kms:Decrypt",
        "kms:GenerateDataKey"
      ],
      "Resource": "*"
    }
  ]
}
```

Note the last two “deny” statements: we cannot properly express this deny in a single statement like the following:

```json
{
  "Effect": "Deny",
  "NotResource": [
    "arn:aws:s3:::<BUCKET>",
    "arn:aws:s3:::<BUCKET>/*",
    "arn:aws:kms:eu-west-1:<ACCOUNT>:key/<KEY_ID>"
  ],
  "NotAction": [
    "s3:ListBucket",
    "s3:GetObject",
    "s3:PutObject",
    "kms:Decrypt",
    "kms:GenerateDataKey"
  ]
}
```

With this single statement, it is enough for one of the two elements not to match in order to avoid the deny (which is what I did at first):

- All actions other than the listed ones escape the deny, as long as they target the listed resources!

- The listed actions escape the deny on any resource, including resources other than the listed ones!
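The difference is easier to see with a few test requests. This Python sketch compares the two-statement version with the single combined statement. The bucket name my-bucket, the KMS key ARN and the account ID are hypothetical, and fnmatchcase is used as an approximation of IAM's `*` wildcard matching on ARNs.

```python
from fnmatch import fnmatchcase

# Hypothetical values standing in for the placeholders of the boundary policy.
LISTED_ACTIONS = {"s3:ListBucket", "s3:GetObject", "s3:PutObject",
                  "kms:Decrypt", "kms:GenerateDataKey"}
LISTED_RESOURCES = [
    "arn:aws:s3:::my-bucket",
    "arn:aws:s3:::my-bucket/*",
    "arn:aws:kms:eu-west-1:111122223333:key/example-key-id",
]


def resource_listed(resource: str) -> bool:
    # fnmatchcase approximates IAM's "*" wildcard on ARNs.
    return any(fnmatchcase(resource, pattern) for pattern in LISTED_RESOURCES)


def denied_by_two_statements(action: str, resource: str) -> bool:
    deny_other_resources = not resource_listed(resource)  # "Action": "*" + NotResource
    deny_other_actions = action not in LISTED_ACTIONS     # NotAction + "Resource": "*"
    return deny_other_resources or deny_other_actions


def denied_by_single_statement(action: str, resource: str) -> bool:
    # NotAction and NotResource in ONE statement must both match for the deny to apply.
    return action not in LISTED_ACTIONS and not resource_listed(resource)


requests = [
    ("s3:DeleteObject", "arn:aws:s3:::my-bucket/report.csv"),  # unlisted action, listed resource
    ("s3:GetObject", "arn:aws:s3:::other-bucket/report.csv"),  # listed action, unlisted resource
    ("s3:GetObject", "arn:aws:s3:::my-bucket/report.csv"),     # both listed
]
for action, resource in requests:
    print(action, resource,
          denied_by_two_statements(action, resource),
          denied_by_single_statement(action, resource))
```

The first two requests are denied by the two-statement version but slip through the single statement, which matches the two cases listed above.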

VPC Endpoint Interface for S3

In order to reach the S3 bucket through the VPC interface endpoint, we use, for example, the following AWS CLI command:

```shell
aws s3 ls s3://<Bucket> --endpoint-url https://bucket.<vpc_endpoint_interface_dns_name>
```
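The endpoint URL is derived from the interface endpoint's DNS name, which AWS typically lists with a leading `*.` label. A minimal Python helper, assuming a DNS name of that shape (the endpoint ID and bucket name below are made up):

```python
def bucket_endpoint_url(endpoint_dns_name: str) -> str:
    """Build the --endpoint-url value for bucket operations through an S3 interface endpoint."""
    # Drop the leading wildcard label ("*.") if the DNS entry includes it.
    if endpoint_dns_name.startswith("*."):
        endpoint_dns_name = endpoint_dns_name[2:]
    return f"https://bucket.{endpoint_dns_name}"


# Hypothetical endpoint DNS name.
dns_name = "*.vpce-0123456789abcdef0-abcd1234.s3.eu-west-1.vpce.amazonaws.com"
url = bucket_endpoint_url(dns_name)
print(f"aws s3 ls s3://my-bucket --endpoint-url {url}")
```

The same URL can also be passed as the endpoint_url argument when creating an S3 client with boto3.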

IP-based restriction: we use a security group ingress rule to restrict the allowed source IP addresses to a specific CIDR. **This is a key point of the protection in place**, because, as we will see, the bucket refuses any request that does not come from the VPC (and hence from the VPC interface endpoint).
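To reason about the ingress rule, the source-address check a security group performs can be mimicked with Python's ipaddress module. This is a sketch: 10.20.0.0/24 is a hypothetical on-premises CIDR, and a real security group rule additionally matches protocol and port (443 for HTTPS).

```python
import ipaddress
from typing import Iterable


def ingress_allows(source_ip: str, allowed_cidrs: Iterable[str]) -> bool:
    """True if source_ip falls inside one of the allowed CIDR blocks."""
    address = ipaddress.ip_address(source_ip)
    # Security group rules are allow-only: one matching rule is enough.
    return any(address in ipaddress.ip_network(cidr) for cidr in allowed_cidrs)


ON_PREM_CIDRS = ["10.20.0.0/24"]  # hypothetical on-premises server range
print(ingress_allows("10.20.0.15", ON_PREM_CIDRS))  # prints True
print(ingress_allows("10.20.1.15", ON_PREM_CIDRS))  # prints False
```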

VPCE Policy:

- Resource Target Restriction: it is necessary to specify the names of the AWS-owned S3 buckets used by AWS services (for example, package repositories).

- Policy Restriction: the policy globally restricts access to AWS resources, limiting access from the project account to resources in the project account (we chose to restrict on the account rather than the organization). This can be refined later.

We use this example to produce the following policy:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "AllowRequestsByOrgsIdentitiesToOrgsResources",
      "Effect": "Allow",
      "Principal": {
        "AWS": "*"
      },
      "Action": "*",
      "Resource": "*",
      "Condition": {
        "StringEquals": {
          "aws:PrincipalOrgID": "<my-org-id>",
          "aws:ResourceOrgID": "<my-org-id>"
        }
      }
    },
    {
      "Sid": "AllowRequestsByAWSServicePrincipals",
      "Effect": "Allow",
      "Principal": {
        "AWS": "*"
      },
      "Action": "*",
      "Resource": "*",
      "Condition": {
        "Bool": {
          "aws:PrincipalIsAWSService": "true"
        }
      }
    },
    {
      "Sid": "AllowRequestsToAWSOwnedResources",
      "Effect": "Allow",
      "Principal": {
        "AWS": "*"
      },
      "Action": [
        "s3:GetObject"
      ],
      "Resource": [
        "arn:aws:s3:::packages.<region>.amazonaws.com/*",
        "arn:aws:s3:::repo.<region>.amazonaws.com/*",
        "arn:aws:s3:::amazonlinux.<region>.amazonaws.com/*",
        "arn:aws:s3:::amazonlinux-2-repos-<region>/*",
        .....
      ]
    }
  ]
}
```

Note that we removed the statements we do not need: for example, we do not need cross-account access.
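Keep in mind that an endpoint policy never grants access by itself: it only filters what may pass through the endpoint, and the identity-based and bucket policies still apply. The Python sketch below mirrors the three statements of the policy above, with a hypothetical organization ID and only two of the AWS-owned bucket patterns (eu-west-1 standing in for the region), to show which requests the endpoint lets through.

```python
from fnmatch import fnmatchcase
from typing import Dict

ORG_ID = "o-exampleorg"  # hypothetical organization ID
AWS_OWNED_OBJECTS = [
    "arn:aws:s3:::packages.eu-west-1.amazonaws.com/*",
    "arn:aws:s3:::repo.eu-west-1.amazonaws.com/*",
]


def endpoint_lets_through(ctx: Dict[str, str], action: str, resource: str) -> bool:
    # Statement 1: the identity AND the resource both belong to our organization.
    if ctx.get("aws:PrincipalOrgID") == ORG_ID and ctx.get("aws:ResourceOrgID") == ORG_ID:
        return True
    # Statement 2: requests made by AWS service principals.
    if ctx.get("aws:PrincipalIsAWSService") == "true":
        return True
    # Statement 3: read-only access to the listed AWS-owned buckets.
    if action == "s3:GetObject" and any(fnmatchcase(resource, p) for p in AWS_OWNED_OBJECTS):
        return True
    # No statement matched: the endpoint policy implicitly denies the request.
    return False


ours = {"aws:PrincipalOrgID": ORG_ID, "aws:ResourceOrgID": ORG_ID,
        "aws:PrincipalIsAWSService": "false"}
foreign_bucket = {"aws:PrincipalOrgID": ORG_ID, "aws:ResourceOrgID": "o-someoneelse",
                  "aws:PrincipalIsAWSService": "false"}
print(endpoint_lets_through(ours, "s3:GetObject", "arn:aws:s3:::my-bucket/report.csv"))
print(endpoint_lets_through(foreign_bucket, "s3:PutObject", "arn:aws:s3:::attacker-bucket/dump"))
```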

S3 Bucket Policy

We start with this template and produce this policy:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "IdentityPerimeter",
      "Effect": "Deny",
      "Principal": "*",
      "Action": "s3:*",
      "Resource": [
        "arn:aws:s3:::<BUCKET>",
        "arn:aws:s3:::<BUCKET>/*"
      ],
      "Condition": {
        "Bool": {
          "aws:PrincipalIsAWSService": "false"
        },
        "StringNotEquals": {
          "aws:PrincipalOrgID": "<OrgID>"
        }
      }
    },
    {
      "Sid": "NetworkPerimeter",
      "Effect": "Deny",
      "Principal": "*",
      "Action": "s3:*",
      "Resource": [
        "arn:aws:s3:::<BUCKET>",
        "arn:aws:s3:::<BUCKET>/*"
      ],
      "Condition": {
        "StringNotEquals": {
          "aws:SourceVpc": "<VPC ID>"
        },
        "Bool": {
          "aws:PrincipalIsAWSService": "false"
        },
        "ArnNotLike": {
          "aws:PrincipalArn": [
            "arn:aws:iam::<ACCOUNT>:role/<AUTHORIZED ROLE NAME>",
            "arn:aws:iam::<ACCOUNT>:role/<TERRAFORM RUNNER ASSUMED ROLE NAME>"
          ]
        }
      }
    }
  ]
}
```

We had to add an exception to the Network perimeter statement so that specific IAM identities can access the bucket without going through the VPC Endpoint:

- Requests made from the AWS Console don't go through VPC endpoints, so in order to see the bucket and its parameters in the console, we need to add the authorized role ARN. EDIT: another approach is to add an aws:SourceIp exception. In enterprise networks, outbound Internet requests from users commonly go through a proxy, and we can use its CIDR instead. The upside is that you don't have to update the policy if another IAM identity needs Console access.

- Deployments of resources are made with IaC (Terraform), and those requests don't go through the VPC endpoint either, so we also add the ARN of the role assumed by Terraform.

Also, note the use of aws:SourceVpc instead of aws:SourceVpce: with aws:SourceVpc, we don't have to update the statement when the VPC endpoint is deleted and recreated (its ID will change, but the requests will still come from the same VPC).
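Putting the NetworkPerimeter statement together, here is a Python sketch of when it denies a request. The VPC ID and role ARNs are hypothetical placeholders. For an assumed-role session, aws:PrincipalArn contains the ARN of the role rather than of the session, which is why the exceptions list role ARNs.

```python
from fnmatch import fnmatchcase
from typing import Dict

VPC_ID = "vpc-0example"  # hypothetical
EXEMPT_PRINCIPALS = [
    "arn:aws:iam::111122223333:role/console-admin",     # hypothetical authorized role
    "arn:aws:iam::111122223333:role/terraform-runner",  # hypothetical Terraform role
]


def network_perimeter_denies(ctx: Dict[str, str]) -> bool:
    """True if the NetworkPerimeter Deny applies (all three conditions true)."""
    not_from_vpc = ctx.get("aws:SourceVpc") != VPC_ID                  # StringNotEquals
    not_aws_service = ctx.get("aws:PrincipalIsAWSService") == "false"  # Bool
    principal = ctx.get("aws:PrincipalArn", "")
    not_exempt = not any(fnmatchcase(principal, p) for p in EXEMPT_PRINCIPALS)  # ArnNotLike
    return not_from_vpc and not_aws_service and not_exempt


app_role = "arn:aws:iam::111122223333:role/onprem-s3-role"  # hypothetical
onprem = {"aws:SourceVpc": VPC_ID, "aws:PrincipalIsAWSService": "false",
          "aws:PrincipalArn": app_role}
console = {"aws:PrincipalIsAWSService": "false",
           "aws:PrincipalArn": "arn:aws:iam::111122223333:role/console-admin"}
stolen_key = {"aws:PrincipalIsAWSService": "false", "aws:PrincipalArn": app_role}
for name, ctx in (("onprem", onprem), ("console", console), ("stolen_key", stolen_key)):
    print(name, network_perimeter_denies(ctx))
```

Only the last request, made with the application's credentials from outside the VPC, is denied: this is the compromised-key scenario the data perimeter is meant to neutralize.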

Conclusion

Overall, we have seen that multiple configuration steps are needed in order to properly restrict access to an AWS S3 bucket for an IAM identity. The key here was to test each element separately: I had to experiment a lot in a separate AWS account in order to verify all the parts, and I learned a lot during the process.